Shaded relief maps color elevation in a way that it looks as if the terrain is cast in a low-angle light, which creates bright spots and shadows. The aesthetic styling creates an almost photographic illusion, which is easy to grasp to understand the variation in terrain. It is important to note that this style is truly an illusion as the light is often physically inaccurate and the elevation is usually exaggerated to increase contrast.

In this post, we'll use the ASCII DEM referenced in the previous post to create another grid, which represents a shaded relief version of the terrain in NumPy. You can download this DEM here:

https://geospatialpython.googlecode.com/files/myGrid.asc

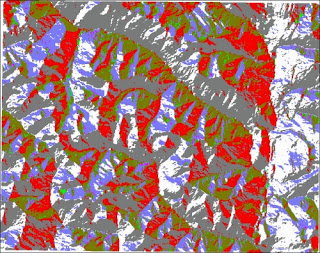

If we color the DEM to visualize the source data we get the following image which we used in the previous post:

This terrain is quite dynamic so we won't need to exaggerate the elevation; however, the script has a variable called z, which can be increased from 1.0 to scale the elevation up.

After we define all the variables including input and output file names, you'll see the header parser based on the linecache module, which also uses a python list comprehension to loop and parse the lines that are then split from a list into the six variables. We also create a y cell size called ycell, which is just the inverse of the cell size. If we don't do this, the resulting grid will be transposed.

Note we define file names for slope and aspect grids, which are two intermediate products that are combined to create the final product. These intermediate grids are output as well, just to take a look at the process. They can also serve as inputs to other types of products.

This script uses a three by three windowing method to scan the image and smooth out the center value in these mini grids. But because we are using NumPy, we can process the entire array at once, as opposed to a lengthy series of nested loops. This technique is based on the excellent work of a developer called Michal Migurski, who implemented the clever NumPy version of Matthew Perry's C++ implementation, which served as the basis for the DEM tools in the GDAL suite.

After the slope and aspect are calculated, they are used to output the shaded relief. Finally, everything is saved to disk from NumPy. In the savetxt() method we specify a 4 integer format string, as the peak elevations are several thousand feet:

from linecache import getline

import numpy as np

# File name of ASCII digital elevation model

source = "dem.asc"

# File name of the slope grid

slopegrid = "slope.asc"

# File name of the aspect grid

aspectgrid = "aspect.asc"

# Output file name for shaded relief

shadegrid = "relief.asc"

## Shaded elevation parameters

# Sun direction

azimuth=315.0

# Sun angle

altitude=45.0

# Elevation exageration

z=1.0

# Resolution

scale=1.0

# No data value for output

NODATA = -9999

# Needed for numpy conversions

deg2rad = 3.141592653589793 / 180.0

rad2deg = 180.0 / 3.141592653589793

# Parse the header using a loop and

# the built-in linecache module

hdr = [getline(source, i) for i in range(1,7)]

values = [float(h.split(" ")[-1].strip()) \

for h in hdr]

cols,rows,lx,ly,cell,nd = values

xres = cell

yres = cell * -1

# Load the dem into a numpy array

arr = np.loadtxt(source, skiprows=6)

# Exclude 2 pixels around the edges which are usually NODATA.

# Also set up structure for a 3x3 window to process the slope

# throughout the grid

window = []

for row in range(3):

for col in range(3):

window.append(arr[row:(row + arr.shape[0] - 2), \

col:(col + arr.shape[1] - 2)])

# Process each cell

x = ((z * window[0] + z * window[3] + z * \

window[3] + z * window[6]) - \

(z * window[2] + z * window[5] + z * \

window[5] + z * window[8])) / (8.0 * xres * scale);

y = ((z * window[6] + z * window[7] + z * window[7] + \

z * window[8]) - (z * window[0] + z * window[1] + \

z * window[1] + z * window[2])) / (8.0 * yres * scale);

# Calculate slope

slope = 90.0 - np.arctan(np.sqrt(x*x + y*y)) * rad2deg

# Calculate aspect

aspect = np.arctan2(x, y)

# Calculate the shaded relief

shaded = np.sin(altitude * deg2rad) * np.sin(slope * deg2rad) \

+ np.cos(altitude * deg2rad) * np.cos(slope * deg2rad) \

* np.cos((azimuth - 90.0) * deg2rad - aspect);

shaded = shaded * 255

# Rebuild the new header

header = "ncols %s\n" % shaded.shape[1]

header += "nrows %s\n" % shaded.shape[0]

header += "xllcorner %s\n" % \

(lx + (cell * (cols - shaded.shape[1])))

header += "yllcorner %s\n" % \

(ly + (cell * (rows - shaded.shape[0])))

header += "cellsize %s\n" % cell

header += "NODATA_value %s\n" % NODATA

# Set no-data values

for pane in window:

slope[pane == nd] = NODATA

aspect[pane == nd] = NODATA

shaded[pane == nd] = NODATA

# Open the output file, add the header, save the slope grid

with open(slopegrid, "wb") as f:

f.write(header)

np.savetxt(f, slope, fmt="%4i")

# Open the output file, add the header, save the slope grid

with open(aspectgrid, "wb") as f:

f.write(header)

np.savetxt(f, aspect, fmt="%4i")

# Open the output file, add the header, save the array

with open(shadegrid, "wb") as f:

f.write(header)

np.savetxt(f, shaded, fmt="%4i")

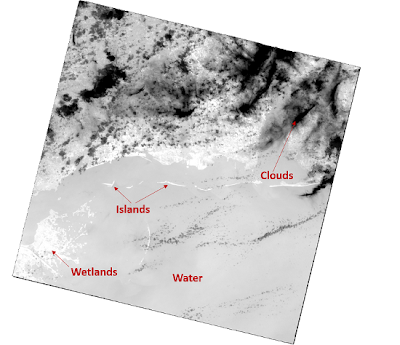

If we load the output grid into QGIS and specify the styling to stretch the image to the min and max, we see the following image. You can also open the image in the FWTools OpenEV application discussed in the Installing GDAL section in Chapter 4, Geospatial Python Toolbox, which will automatically stretch the image for optimal viewing.

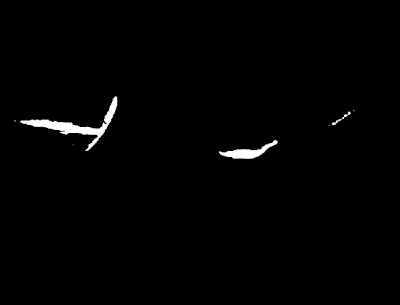

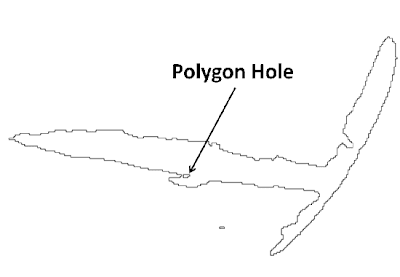

As you can see, the preceding image is much easier to comprehend than the original pseudo-color representation we examined originally. Next, let's look at the slope raster used to create the shaded relief:The slope shows the gradual decline in elevation from high points to low points in all directions of the data set. Slope is an especially useful input for many types of hydrology models. The aspect shows the maximum rate of downslope change from one cell to its neighbors. If you compare the aspect image to the shaded relief image you can see the red and gray values of the aspect image correspond to shadows in the shaded relief. So the slope is primarily responsible for turning the DEM into a terrain relief while the aspect is responsible for the shading.

In the next post, we'll use the elevation data to create elevation contours!